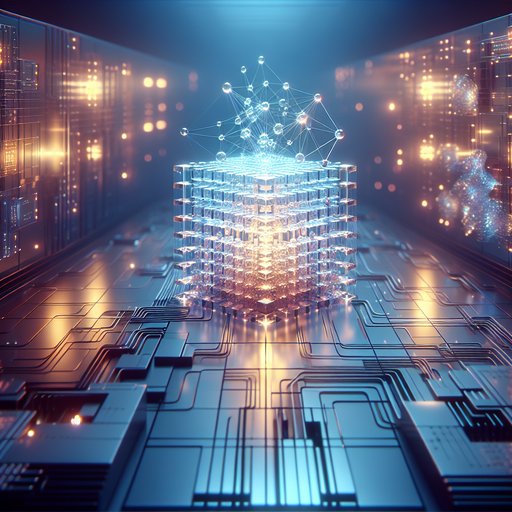

Quantum computing has evolved from a provocative idea in theoretical physics into a globally coordinated engineering effort, with laboratories and companies racing to build machines that exploit superposition and entanglement. Classical processors flip bits through largely irreversible logic gates. Quantum devices instead manipulate wavefunctions with delicate, reversible operations, using interference to reinforce the amplitudes of useful outcomes and cancel the rest. Quantum computing is not a faster version of today's computing; it is a different model that excels at particular classes of problems, notably cryptanalysis and the simulation of quantum matter. Progress is tangible: larger qubit arrays, better control electronics, and maturing software stacks. Yet the field is still constrained by noise and by the overhead of error correction. Understanding what quantum computers can and cannot do today is essential to charting realistic timelines for secure cryptography and scientific discovery.
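To make "reversible operations" and "interference" concrete, here is a minimal single-qubit sketch in plain Python. It is not tied to any quantum SDK. It assumes an idealized, noise-free qubit and uses the standard Hadamard gate. Applying the gate once creates an equal superposition. Applying it again makes the two paths to |1> cancel, returning the qubit to |0>. That cancellation is interference, and the return to the starting state shows the operation is reversible. The helper names `apply` and `probabilities` are illustrative, not from any library.

```python
import math

# Hadamard gate: a standard single-qubit gate, written as a 2x2 real matrix.
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

def apply(gate, state):
    """Multiply a 2x2 gate by a 2-component amplitude vector (illustrative helper)."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def probabilities(state):
    """Born rule: the probability of each measurement outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

zero = [1.0, 0.0]             # the basis state |0>
superposed = apply(H, zero)    # (|0> + |1>) / sqrt(2): equal superposition
back = apply(H, superposed)    # the two contributions to |1> cancel (interference)

print(probabilities(superposed))  # approximately [0.5, 0.5]
print(probabilities(back))        # approximately [1.0, 0.0]: H undoes itself
```

Real hardware adds noise, which erodes exactly this kind of cancellation. That is why error correction carries such a large overhead.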

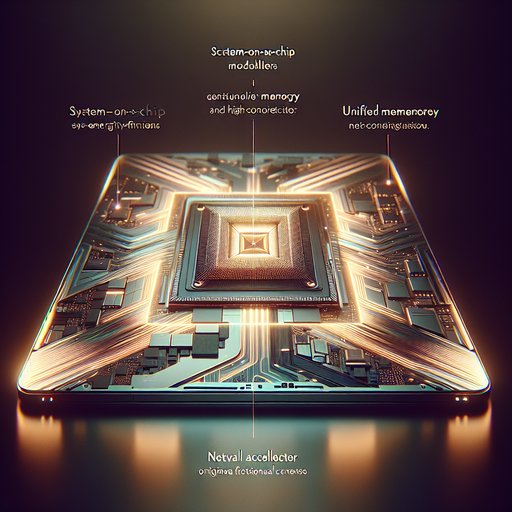

The contest among Intel's x86 CPUs, ARM-based processors, and AMD's RDNA GPUs is not a simple horse race; it is a clash of design philosophies that now meet at the same bottleneck: energy. Each camp optimizes for different trade-offs. x86 prioritizes high per-core performance and broad compatibility with existing software, ARM prioritizes scalable efficiency and tight system integration, and RDNA targets massively parallel graphics and emerging AI features within strict power budgets. As form factors converge and workloads diversify, from cloud-native microservices and AI inference to high-refresh gaming and thin-and-light laptops, these approaches increasingly intersect in the same systems. Understanding how they differ, and where they overlap, explains why raw performance no longer decides the contest on its own and why performance per watt has become the defining metric of modern computing.
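A small worked example shows why performance per watt can reverse a ranking based on raw speed. The sketch below uses two hypothetical chips with made-up throughput and power figures. These are not measurements of any Intel, ARM, or AMD product. Throughput divided by watts gives work per joule. The energy needed for a fixed job is then the job size divided by that ratio.

```python
from dataclasses import dataclass

@dataclass
class Chip:
    name: str
    throughput: float   # work units per second (hypothetical, any consistent unit)
    power_watts: float  # average power drawn while doing that work (hypothetical)

    def perf_per_watt(self) -> float:
        """(work / second) / (joules / second) = work per joule."""
        return self.throughput / self.power_watts

def energy_for_job(chip: Chip, work: float) -> float:
    """Joules needed to finish a fixed amount of work on this chip."""
    return work / chip.perf_per_watt()

# Hypothetical figures for illustration only.
fast = Chip("hypothetical-A", throughput=120.0, power_watts=60.0)
frugal = Chip("hypothetical-B", throughput=90.0, power_watts=30.0)

for chip in (fast, frugal):
    print(chip.name, chip.perf_per_watt(), energy_for_job(chip, 3600.0))
# hypothetical-A 2.0 1800.0
# hypothetical-B 3.0 1200.0
```

Chip A finishes the job sooner, but chip B uses a third less energy. In a battery-powered laptop or a power-capped data center rack, that difference usually matters more.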

Graphics processors began life as helpers to the CPU, pushing pixels to the screen and accelerating windowed desktops. Over three decades, successive architectural changes, notably programmable shaders and later unified shader cores, together with a maturing software stack turned them into the dominant parallel compute engines of our time. NVIDIA's CUDA platform unlocked general-purpose programming at scale, and deep learning quickly found a natural home on this throughput-oriented hardware. Meanwhile, cryptocurrency mining exposed both the raw performance that massive parallelism delivers and the market volatility it can unleash. Tracing this path illuminates how a once-specialized peripheral became central to scientific discovery, modern AI, and even financial systems.

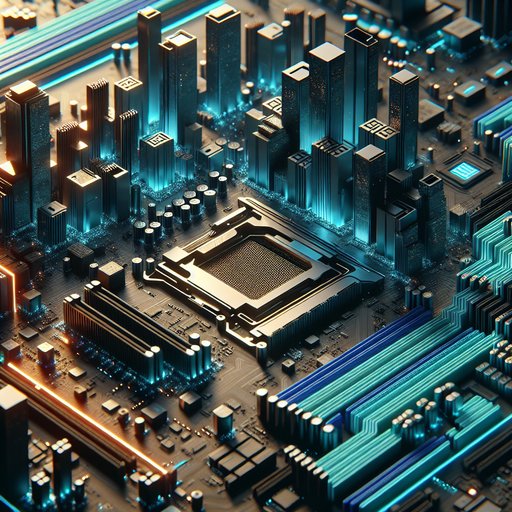

Modern motherboards have transformed from simple host platforms into dense, high-speed backplanes that quietly reconcile conflicting requirements: ever-faster I/O like PCIe 5.0, soaring transient power demands from CPUs and GPUs, and the need to integrate and interoperate with a sprawl of component standards. This evolution reflects decades of accumulated engineering discipline across signal integrity, power delivery, firmware, and mechanical design. Examining how boards reached today’s complexity explains why form factors look familiar while the underlying technology bears little resemblance to the ATX designs of the 1990s, and why incremental user-facing features mask sweeping architectural changes beneath the heatsinks and shrouds.