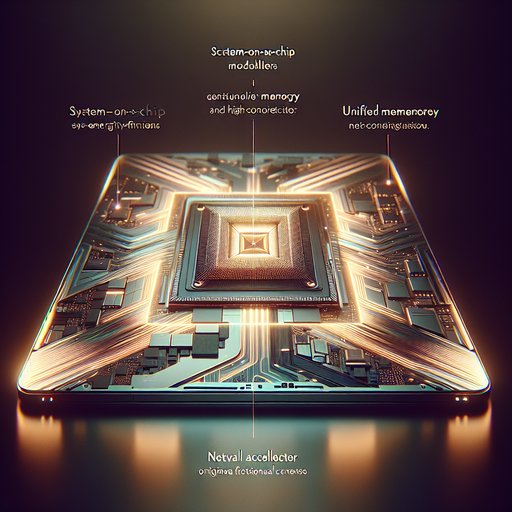

Apple’s transition from Intel x86 to its own ARM-based Apple Silicon is one of the most consequential shifts in personal computing since the move to multi-core laptops. Announced in 2020 and executed at consumer scale within months, the change brought smartphone-style system-on-chip design, unified memory, and machine learning accelerators into mainstream notebooks. The result is a new performance-per-watt baseline that has forced rivals to revisit assumptions about thermals, battery life, and how much hardware specialization belongs in a portable computer. By controlling silicon, system, and software together, Apple has reframed what users can reasonably expect from a laptop and challenged the conventions that defined the PC era for decades.

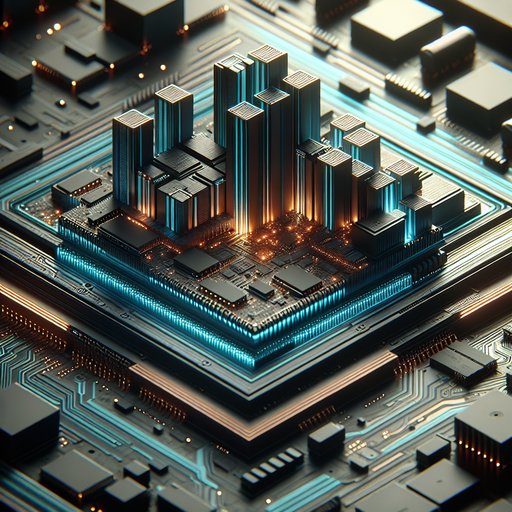

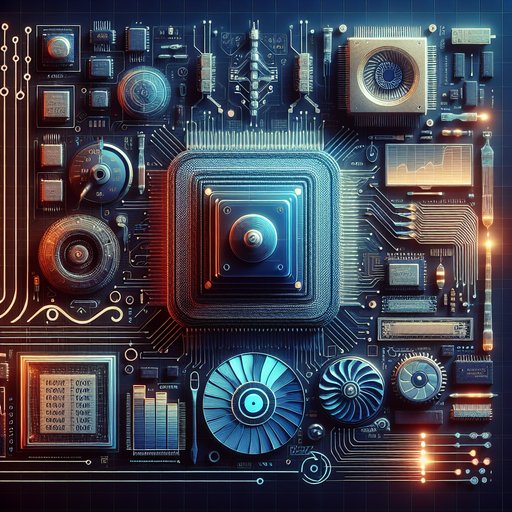

Motherboards have transformed from simple interconnects into high-speed, high-power platforms that orchestrate dozens of standards without compromising stability. The push for PCIe 5.0 bandwidth, multi-hundred–ampere CPU transients, and a tangle of modern I/O—USB4, Thunderbolt, NVMe, Wi‑Fi, and legacy interfaces—has forced sweeping changes in board layout, materials, firmware, and validation. What used to be a question of slot count is now a careful exercise in signal integrity budgeting, power delivery engineering, and standards negotiation. Understanding how motherboards evolved to meet these demands reveals the quiet, meticulous work that enables today’s CPUs, GPUs, and SSDs to reach their potential while still welcoming older devices.
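To put the PCIe 5.0 bandwidth figure in perspective, here is a rough back-of-the-envelope sketch. It assumes the published per-lane signaling rates (16 GT/s for PCIe 4.0, 32 GT/s for PCIe 5.0) and 128b/130b line encoding, and it ignores packet and protocol overhead, so real throughput will be lower. The function name `pcie_bandwidth_gbytes` is purely illustrative.

```python
def pcie_bandwidth_gbytes(transfer_rate_gt_s, lanes, encoding_efficiency=128 / 130):
    """Approximate one-direction link bandwidth in GB/s, before protocol overhead.

    transfer_rate_gt_s: per-lane signaling rate in gigatransfers per second.
    lanes: link width (x1, x4, x16, ...).
    encoding_efficiency: payload bits per line bit (128b/130b for PCIe 3.0 to 5.0).
    """
    # Each transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return transfer_rate_gt_s * encoding_efficiency * lanes / 8


gen4_x16 = pcie_bandwidth_gbytes(16.0, 16)  # typical PCIe 4.0 graphics slot
gen5_x16 = pcie_bandwidth_gbytes(32.0, 16)  # typical PCIe 5.0 graphics slot
gen5_x4 = pcie_bandwidth_gbytes(32.0, 4)    # typical PCIe 5.0 NVMe slot

print(f"PCIe 4.0 x16: {gen4_x16:.1f} GB/s")
print(f"PCIe 5.0 x16: {gen5_x16:.1f} GB/s")
print(f"PCIe 5.0 x4:  {gen5_x4:.1f} GB/s")
```

Doubling the per-lane rate doubles the bandwidth but also tightens the loss and jitter budget for every trace, which is why layout and materials matter as much as slot count.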

How we measure computer performance has evolved from counting instructions and floating-point operations to profiling entire user experiences. Early metrics like MIPS and FLOPS offered tidy numbers, but they often misrepresented real workloads where memory, I/O, and software behavior dominate. Modern benchmarks span SPEC suites, database transactions, browser responsiveness, and gaming frame times, reflecting a landscape where CPUs, GPUs, storage, and networks interact. Understanding why traditional metrics sometimes fail—and how newer methods address those gaps—helps engineers and users choose systems that perform well in practice, not just on paper.
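A small sketch can show why a single averaged number may mislead. The two frame-time traces below are invented for illustration, not measured data, and `summarize_frame_times` is a hypothetical helper. Both traces have the same average frame rate, yet one of them stutters badly, which only the tail of the distribution reveals.

```python
import statistics


def summarize_frame_times(frame_times_ms):
    """Return average FPS plus tail figures for a list of frame times in milliseconds."""
    ordered = sorted(frame_times_ms)
    mean_ms = statistics.fmean(ordered)
    # Nearest-rank 99th percentile, computed with integer math: ceil(0.99 * n).
    rank = max(1, (99 * len(ordered) + 99) // 100)
    return {
        "avg_fps": 1000.0 / mean_ms,
        "p99_frame_ms": ordered[rank - 1],
        "worst_frame_ms": ordered[-1],
    }


# Synthetic traces (assumed values): both total 2000 ms over 100 frames.
smooth = [20.0] * 100                 # every frame takes 20 ms
stutter = [10.0] * 95 + [210.0] * 5   # mostly fast, with five long hitches

smooth_stats = summarize_frame_times(smooth)
stutter_stats = summarize_frame_times(stutter)

print("smooth :", smooth_stats)
print("stutter:", stutter_stats)
```

Both traces report the same average FPS, but the 99th-percentile frame time differs by roughly a factor of ten, which is the kind of gap that frame-time benchmarks are meant to expose.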

Operating systems define how humans and hardware cooperate, and the path from early Unix to today’s macOS, Linux distributions, and Windows reveals how design philosophies mold that cooperation. Unix introduced portability, text-first tooling, and process isolation that still anchor modern software practice. macOS channels Unix heritage through a carefully integrated desktop and tight hardware-software coupling, Linux turns the Unix ethos into a global, modular ecosystem, and Windows optimizes for broad compatibility and cohesive application frameworks. Tracing these choices clarifies why terminals feel familiar across platforms, why software installs differently on each, and why security hardening has converged despite divergent histories. The story is less a lineage than a dialogue: common ideas refined under different constraints, producing distinct user experiences and system architectures that continue to influence how we build, deploy, and secure software at every scale.