AI’s recent leaps didn’t spring from software alone; they were coaxed from silicon that stopped pretending to be a general-purpose computer. Graphics processors learned a new grammar for linear algebra. Data centers stitched accelerators together like organ systems. And now neuromorphic hardware—machines that compute with spikes and timing instead of clocks and frames—edges from research into prototypes that see, react, and conserve power with an animal’s thrift. The result is not just faster models, but a change in where intelligence lives: less in cloud fortresses and more in the hands, wheels, wings, and sensors that encounter the physical world first.

The story begins in a cramped lab filled with the whine of cooling fans, where a pair of gaming graphics cards once became an unlikely engine for a breakthrough. In 2012, a deep neural network trained on off-the-shelf GPUs jolted computer vision out of its long plateau, and the field never looked back. Programmers who had spent years feeding CPUs a diet of loops and branches retooled their minds to think in matrices and kernels. Toolchains hardened, CUDA became a common tongue, and the idea of a chip optimized for one kind of math—dense linear algebra—stopped sounding like a straitjacket and more like an invitation.

Momentum shifted from labs to entire buildings. Racks of accelerators learned to act as one, linked by fat interconnects that shuttle tensors as readily as thoughts. Memory stopped being a bottleneck hauled across motherboards and became a stack perched millimeters from compute, its bandwidth measured less by theory than by the thickness of copper in a package. Schedulers juggled thousands of tiny tasks so a transformer didn’t die of starvation while waiting for its next batch.

The outline of the future sharpened: if AI is to think in real time—on streets, factory floors, in an exoskeleton—the silicon must be built for that tempo. Specialization gathered names and mascots. Google’s tensor chips were tuned for the matrix dance of training and inference in sprawling pods. Microsoft threaded field‑programmable logic through its servers to make search and security react in microseconds.

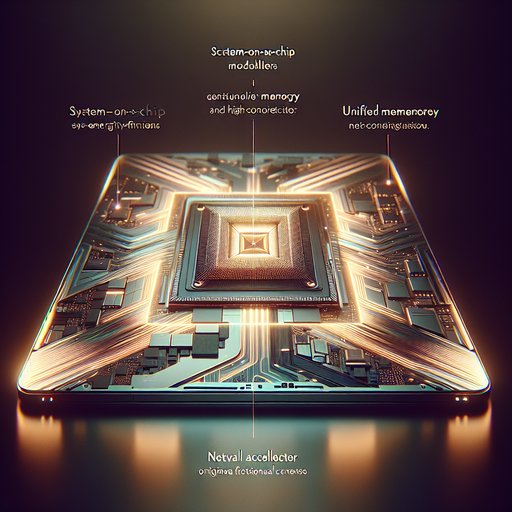

Apple pulled a neural engine into the phone’s beating heart so that photos, speech, and biometrics could stay private and feel instantaneous. The pattern felt less like a war of speeds and more like a geography lesson: different forms of intelligence prefer different terrain, and the map of compute diversified accordingly. Precision itself became negotiable. Where researchers once insisted on 32 or 64 bits, hardware designers taught models to thrive on less—first half precision, then bfloat, then formats that fit an exponential’s curve into a narrower lane.

Tensor cores and systolic arrays found rhythm in regularity, while compilers pruned awkward branches and exploited sparsity the way a sculptor finds a figure inside stone. Work that had taken weeks collapsed into days, then hours. The gain wasn’t only performance; it was legitimacy for a credo that would shape the next experiments: co‑design the algorithm with the substrate that will breathe life into it. The chips themselves began to look audacious.

Some outfits stitched dies together into chiplets and linked them across silicon interposers, turning what used to be a motherboard into a single organism. Others went further and made a whole wafer act as one device—no reticle walls, no awkward journeys off chip—so an ocean of compute could sit beside a sea of on‑wafer memory. Network fabrics grew into backplanes that treat dozens of accelerators as a single node. In these rooms, a researcher can point a cavernous model at data and watch it learn faster than the last time, not because the math is different, but because the runway is longer and smoother.

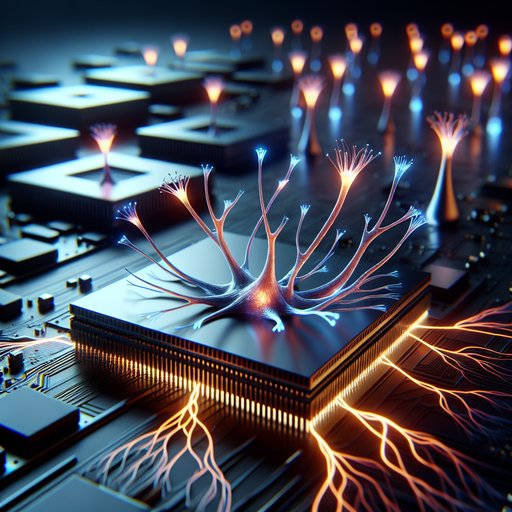

Then, another branch of the family tree shook itself awake. Neuromorphic chips—sketches of cortex drawn in CMOS—listen in spikes and speak in precise timings. IBM’s early experiments showed that a million software neurons can inhabit a postage‑stamp die without gulps of power. Intel’s research platforms pushed the idea of adaptation onto the chip, so that networks don’t just execute learned behavior; they also change as they sense.

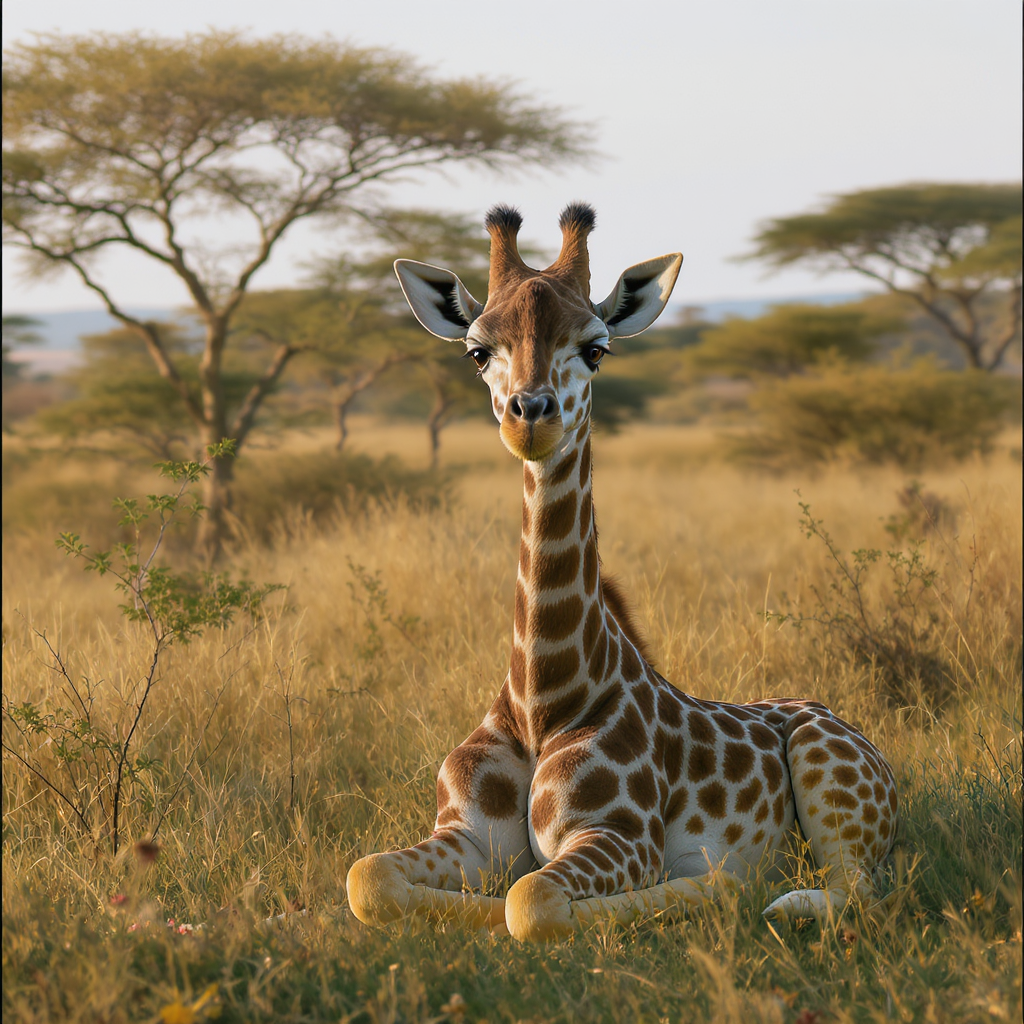

In Manchester, a machine knitted from a swarm of simple cores modeled spiking networks at biological time scales. None of these systems aims to beat a GPU at multiplying dense matrices. They are after a different prize: moving through the world with grace on a whisper of energy. You can glimpse that prize in devices that don’t wait for frames.

Event‑based cameras from European startups send only the pixels that change, like a retina piping impulses straight to the optic nerve. Paired with spiking processors, they steer palm‑sized drones between branches without melting their batteries, or steady robotic hands as they roll a seed between fingertips. Tactile skins report pressure transients instead of periodic scans, letting grippers hold ripe fruit the way a child does—by listening to micro‑slips rather than calculating an average. The loop between sensing and acting shrinks, and a robot’s behavior starts to resemble reflexes more than deliberation.

Analog and in‑memory computing adds another subplot. In labs, engineers coax arrays of resistive devices to perform the core operation of deep learning—multiply and accumulate—by letting currents sum where the weights live. It looks like a throwback to slide rules and op‑amps, yet the effect is modern: drastically less traffic between memory and compute. The components drift and demand calibration.

Temperature nudges bits. But the promise persists: a model that trains or fine‑tunes inside the very fabric that stores it, sipping power where digital machines would gulp. Marry that with digital control, and the line between storage and thought softens. All of this power creates its own weather.

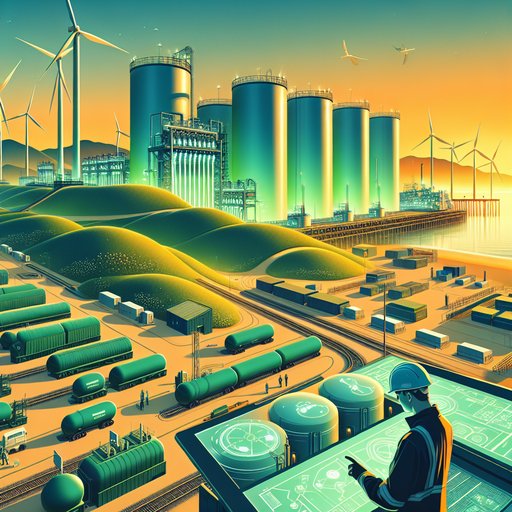

Data centers negotiate electrical diets with utilities; ten megawatts here, then twenty. Foundries reserve calendar quarters for advanced packaging because demand is as scarce as it is holy. Toolchains from compilers to profilers become gatekeepers of what is even imaginable, deciding which model architectures harvest a chip’s strengths and which starve on its quirks. The geopolitics of lithography and substrate supply line up behind research roadmaps, and sustainability isn’t a press release but the difference between a city that keeps its lights and one that flickers.

Yet the horizon refuses to settle into a single silhouette. In one direction, ever‑denser accelerators pull more intelligence into shared clouds where models can be trained on collective memory and rented by the millisecond. In another, neuromorphic and in‑sensor computing push cognition outward, into prosthetics that adjust as a runner’s gait changes, into hearing aids that follow the voice you care about, into factory cells that drift less because they feel the torque instead of sampling it. Between them lies a question that history keeps asking of new mediums: when the substrate changes the art, what new forms will be possible, and what old assumptions will we have to unlearn?

We are relearning that performance, in the end, is not only a higher number on a chart. It is a sensory latency low enough that a machine can share our timing. It is a power budget gentle enough that intelligence can live where the work is. It is a hardware–software handshake honest enough that each informs the other without pretense.

Specialized chips and neuromorphic hardware are not a detour from generality; they are a reminder that general intelligence may need many specific bodies. The next boundary won’t be crossed in theory alone, but in silicon that thinks with us, at our pace, in the places we care most about.