How we measure computer performance has evolved from counting instructions and floating-point operations to profiling entire user experiences. Early metrics like MIPS and FLOPS offered tidy numbers, but they often misrepresented real workloads where memory, I/O, and software behavior dominate. Modern benchmarks span SPEC suites, database transactions, browser responsiveness, and gaming frame times, reflecting a landscape where CPUs, GPUs, storage, and networks interact. Understanding why traditional metrics sometimes fail—and how newer methods address those gaps—helps engineers and users choose systems that perform well in practice, not just on paper.

Performance matters because computing is now defined by responsiveness, efficiency, and scale as much as by raw speed. A laptop that compiles code quickly but stutters during video calls feels slow, and a server that shines in synthetic benchmarks may miss service-level objectives under mixed traffic. The shift from single, homogeneous workloads to heterogeneous, concurrent tasks exposes limits in one-number summaries. Measuring what users actually experience requires metrics that reflect the whole system, not just a chip’s theoretical peak.

MIPS, or millions of instructions per second, once promised a simple yardstick, but instructions vary dramatically in complexity and usefulness across architectures. A program dominated by simple integer operations can post a higher MIPS rating while accomplishing less real work than a program that executes fewer, more powerful instructions. Dhrystone-style synthetic tests inflated headline MIPS because compilers could optimize away much of their computation, revealing how easily synthetic loops diverge from practical software. As superscalar and out-of-order designs arrived, the gap widened between counted instructions and the true throughput of useful work.
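To make the MIPS pitfall concrete, the sketch below applies the standard definition (instruction count divided by execution time times 10^6) to two runs of the same job. The `Run` record, the "simple-ISA" and "complex-ISA" labels, and every number are hypothetical, chosen only to show that a higher MIPS rating can coincide with less useful work per second.

```python
from dataclasses import dataclass


@dataclass
class Run:
    name: str
    instructions: int     # dynamic instruction count actually executed
    seconds: float        # wall-clock execution time
    tasks_completed: int  # units of useful work (hypothetical metric)


def mips(run: Run) -> float:
    """MIPS = instruction count / (execution time * 10^6)."""
    return run.instructions / (run.seconds * 1e6)


def work_rate(run: Run) -> float:
    """Useful work completed per second, what users actually notice."""
    return run.tasks_completed / run.seconds


# Hypothetical: the same job compiled for two different instruction sets.
simple_isa = Run("simple-ISA", instructions=4_000_000_000, seconds=2.0, tasks_completed=1_000_000)
complex_isa = Run("complex-ISA", instructions=1_500_000_000, seconds=1.5, tasks_completed=1_000_000)

for r in (simple_isa, complex_isa):
    print(f"{r.name}: {mips(r):.0f} MIPS, {work_rate(r):.0f} tasks/s")
```

Here the simple-ISA run reports twice the MIPS (2000 vs 1000) yet finishes the same job more slowly, which is exactly the divergence a one-number instruction rate hides.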

FLOPS quantify floating-point throughput and remain vital in scientific and engineering computing, especially for GPUs. The High-Performance LINPACK (HPL) benchmark used to rank the TOP500 list measures dense linear algebra, but because dense kernels reuse data heavily, it overstates the performance that memory- and communication-bound applications achieve. Complementary suites like HPCG emphasize memory access patterns and interconnect behavior, offering a more realistic view of high-performance systems. In machine learning, MLPerf evaluates both training and inference across models and hardware, reflecting that mixed precision, tensor units, and software stacks can matter as much as raw FLOPS.
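A quick way to see why a dense-algebra score and a sparse, memory-bound score can differ so much is to compare each against theoretical peak, the Rmax-to-Rpeak style ratio that TOP500 entries make visible. The sketch below computes peak as nodes times cores times clock times FLOPs per cycle; the node configuration and both "measured" rates are hypothetical placeholders, not results from any real system.

```python
def peak_flops(nodes: int, cores_per_node: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak = nodes * cores * clock * FLOPs each core can retire per cycle."""
    return nodes * cores_per_node * clock_hz * flops_per_cycle


def efficiency(measured_flops: float, peak: float) -> float:
    """Fraction of theoretical peak actually sustained."""
    return measured_flops / peak


# Hypothetical node: 64 cores at 2.0 GHz, each issuing two 512-bit FMAs per cycle
# (8 FP64 lanes * 2 ops per FMA * 2 units = 32 FP64 FLOPs per cycle).
peak = peak_flops(nodes=1, cores_per_node=64, clock_hz=2.0e9, flops_per_cycle=32)

# Hypothetical sustained rates for a dense (HPL-like) and a sparse (HPCG-like) run.
dense_measured = 3.3e12
sparse_measured = 0.1e12

print(f"peak: {peak / 1e12:.3f} TFLOPS")
print(f"dense efficiency:  {efficiency(dense_measured, peak):.1%}")
print(f"sparse efficiency: {efficiency(sparse_measured, peak):.1%}")
```

Both runs use the same silicon; only the memory behavior of the workload changes, so the peak number alone says little about which application will run well.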

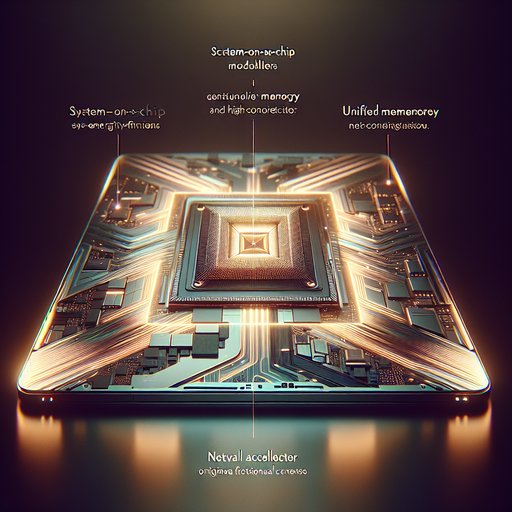

System-level benchmarks emerged to bridge the gap between microbenchmarks and real workloads. SPEC CPU runs standardized integer and floating-point programs and reports both speed (time to finish one copy of each program) and throughput (many copies running concurrently), while SPECpower makes energy a first-class metric. Transactional benchmarks like TPC-C and analytical counterparts such as TPC-DS reflect database behavior under concurrency, logging, and complex queries. General suites like PCMark and cross-platform tests like Geekbench and Cinebench add breadth, but differences in compilers, libraries, and tuning flags still shape results and require transparent methodologies.
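SPEC CPU summarizes a suite by normalizing each program's run time against a reference machine and then taking the geometric mean of those ratios, so no single program dominates the overall score. The sketch below reproduces only that arithmetic; the benchmark names and timings are hypothetical and do not correspond to real SPEC programs or published results.

```python
import statistics


def spec_style_score(reference_s: dict[str, float], measured_s: dict[str, float]) -> float:
    """Geometric mean of per-benchmark ratios (reference time / measured time)."""
    ratios = [reference_s[name] / measured_s[name] for name in reference_s]
    return statistics.geometric_mean(ratios)


# Hypothetical reference-machine and test-machine timings, in seconds.
reference = {"bench_a": 200.0, "bench_b": 800.0, "bench_c": 400.0}
measured = {"bench_a": 100.0, "bench_b": 100.0, "bench_c": 100.0}

score = spec_style_score(reference, measured)
print(f"per-benchmark ratios: {[reference[k] / measured[k] for k in reference]}")
print(f"geometric-mean score: {score:.2f}")
```

The ratios here are 2, 8, and 4, giving a geometric mean of 4 rather than the arithmetic mean of about 4.67; the geometric mean keeps one outsized speedup from inflating the summary.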

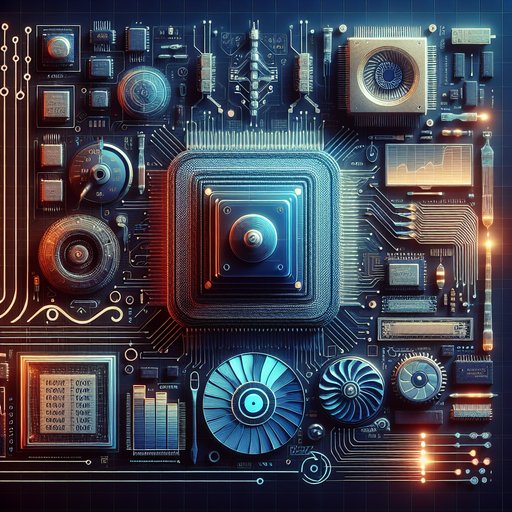

Microarchitectural realities often matter more than nominal metrics suggest. Instructions per cycle (IPC), cache hit rates, branch prediction accuracy, and memory latency can bottleneck workloads long before ALUs or tensor cores reach their peaks. The roofline model shows how arithmetic intensity and memory bandwidth constrain attainable performance, explaining why raising clock speed or FLOPS alone may not move the needle. Power limits and thermal throttling further cap sustained throughput, so performance per watt and steady-state behavior matter as much as short bursts.
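The roofline bound itself is a one-line formula: attainable FLOP/s equals the minimum of peak compute and arithmetic intensity (FLOPs per byte moved) times memory bandwidth. The sketch below evaluates it for a hypothetical machine and two kernels; the peak, bandwidth, and the blocked-GEMM intensity are assumed values, and the model deliberately ignores caches and latency.

```python
def attainable_flops(peak_flops: float, bandwidth_bytes_per_s: float, intensity: float) -> float:
    """Roofline bound: min(compute roof, arithmetic intensity * memory bandwidth)."""
    return min(peak_flops, intensity * bandwidth_bytes_per_s)


def ridge_point(peak_flops: float, bandwidth_bytes_per_s: float) -> float:
    """Arithmetic intensity (FLOP/byte) at which a kernel stops being memory-bound."""
    return peak_flops / bandwidth_bytes_per_s


# Hypothetical machine: 4 TFLOP/s FP64 peak, 200 GB/s sustained memory bandwidth.
PEAK = 4.0e12
BW = 200e9

# Triad-style kernel a[i] = b[i] + s * c[i]: 2 FLOPs per 24 bytes of FP64 traffic
# (two loads and one store, ignoring write-allocate), about 0.083 FLOP/byte.
triad_ai = 2 / 24
# Hypothetical cache-blocked matrix multiply with high data reuse.
blocked_gemm_ai = 50.0

for name, ai in (("triad", triad_ai), ("blocked GEMM", blocked_gemm_ai)):
    base = attainable_flops(PEAK, BW, ai)
    doubled = attainable_flops(2 * PEAK, BW, ai)  # e.g. twice the clock or FLOPs/cycle
    print(f"{name}: {base / 1e9:.1f} GFLOP/s, with 2x peak: {doubled / 1e9:.1f} GFLOP/s")
print(f"ridge point: {ridge_point(PEAK, BW):.0f} FLOP/byte")
```

Doubling peak compute doubles the blocked GEMM bound but leaves the triad stuck near 16.7 GFLOP/s, because it sits far left of the ridge point and only more bandwidth would help it.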

Gaming benchmarks illustrate the shift from average speed to experienced smoothness. Frames per second gives a coarse picture, but frame-time distributions and percentiles (such as 1% and 0.1% lows) surface stutter that averages conceal. Modern engines juggle CPU simulation, GPU rendering, shader compilation, and asset streaming, so performance depends on APIs, drivers, and storage as much as on silicon. Resolution and settings change whether a title is CPU- or GPU-bound, and technologies like shader precompilation and variable rate shading can alter results more than clock speeds suggest.
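The difference between average FPS and "1% lows" is easy to reproduce from a frame-time log. Tools define lows in slightly different ways; the sketch below assumes one common convention, converting the mean frame time of the slowest N% of frames back into FPS (other tools use a percentile frame time instead). The frame trace is hypothetical: mostly 10 ms frames with a handful of 50 ms stutters.

```python
import math


def average_fps(frame_times_ms: list[float]) -> float:
    """Frames rendered divided by total elapsed seconds."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def percent_low_fps(frame_times_ms: list[float], fraction: float) -> float:
    """FPS equivalent of the mean frame time across the slowest `fraction` of frames.

    Assumed convention; some tools report a percentile frame time instead.
    """
    worst = sorted(frame_times_ms, reverse=True)
    count = max(1, math.ceil(len(worst) * fraction))
    mean_worst_ms = sum(worst[:count]) / count
    return 1000.0 / mean_worst_ms


# Hypothetical trace: 990 smooth frames plus 10 stutters.
frames = [10.0] * 990 + [50.0] * 10

print(f"average FPS: {average_fps(frames):.1f}")
print(f"1% low:      {percent_low_fps(frames, 0.01):.1f}")
print(f"0.1% low:    {percent_low_fps(frames, 0.001):.1f}")
```

The average stays above 96 FPS, while the 1% low of 20 FPS exposes the stutters a player would actually feel.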

Traditional metrics fall short when systems are heterogeneous, software-driven, and sensitive to latency. A database with great average throughput can still miss deadlines due to p99 tail latency from garbage collection, I/O hiccups, or noisy neighbors in the cloud. A browser benchmark like Speedometer measures interactive responsiveness that neither MIPS nor FLOPS capture, highlighting JavaScript engines, JIT compilers, and DOM performance. In mobile devices, dynamic voltage and frequency scaling, background tasks, and thermal envelopes shape real responsiveness, meaning identical chips can feel different depending on firmware and cooling.
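Tail latency shows up only when you look at percentiles rather than the mean. The sketch below uses the nearest-rank percentile definition on a hypothetical request log in which 2% of requests hit a long pause (standing in for garbage collection or an I/O hiccup); the 50 ms service-level objective is likewise an assumed value for illustration.

```python
import math
import statistics


def nearest_rank_percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical latencies in ms: mostly fast, with 2% of requests stalled.
latencies_ms = [2.0] * 980 + [200.0] * 20
SLO_P99_MS = 50.0  # hypothetical service-level objective

mean_ms = statistics.fmean(latencies_ms)
p50 = nearest_rank_percentile(latencies_ms, 50)
p99 = nearest_rank_percentile(latencies_ms, 99)

print(f"mean: {mean_ms:.2f} ms, p50: {p50:.1f} ms, p99: {p99:.1f} ms")
print("meets p99 SLO" if p99 <= SLO_P99_MS else "misses p99 SLO")
```

A mean of about 6 ms looks healthy, yet the p99 of 200 ms misses the objective; this is why service dashboards track percentiles rather than averages.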

Better evaluation starts by aligning metrics with goals and measuring full-stack behavior. Representative workloads, repeatable setups, and transparent tuning reduce the gap between benchmark scores and production outcomes. Triangulating with microbenchmarks, application benchmarks, and observability data—such as performance counters and tail latency—yields a more faithful picture. The result is performance that users actually notice: consistent frame pacing, stable service latencies, and efficient power use rather than just a bigger number on a spec sheet.
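Repeatable setups start with a disciplined timing loop: warm up first, repeat the measurement, and report robust statistics instead of one run. The sketch below is a minimal harness built on the standard library's `time.perf_counter` and `statistics` module; the `benchmark` and `workload` names and the default warm-up and trial counts are hypothetical choices, and a real study would also pin CPU frequency, isolate background load, and record the software versions used.

```python
import statistics
import time
from typing import Callable


def benchmark(fn: Callable[[], object], warmup: int = 3, trials: int = 15) -> dict[str, float]:
    """Time `fn` repeatedly after warm-up and return summary statistics in seconds."""
    if trials < 2:
        raise ValueError("need at least two trials to estimate spread")
    for _ in range(warmup):
        fn()  # warm caches and any lazy initialization before timing
    samples = []
    for _ in range(trials):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    samples.sort()
    return {
        "median_s": statistics.median(samples),
        "min_s": samples[0],
        "max_s": samples[-1],
        "stdev_s": statistics.stdev(samples),
    }


def workload() -> int:
    # Hypothetical stand-in for a representative task.
    return sum(i * i for i in range(200_000))


stats = benchmark(workload)
for key, value in stats.items():
    print(f"{key}: {value * 1e3:.3f} ms")
```

Reporting the median alongside the min, max, and standard deviation makes run-to-run noise visible, so a small difference between two systems can be judged against the spread rather than taken at face value.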