Apple’s transition from Intel x86 to its own ARM-based Apple Silicon is one of the most consequential shifts in personal computing since the move to multi-core laptops. Announced in 2020 and executed at consumer scale within months, the change brought smartphone-style system-on-chip design, unified memory, and machine learning accelerators into mainstream notebooks. The result is a new performance-per-watt baseline that has forced rivals to revisit assumptions about thermals, battery life, and how much hardware specialization belongs in a portable computer. By controlling silicon, system, and software together, Apple has reframed what users can reasonably expect from a laptop and challenged the conventions that defined the PC era for decades.

Apple’s Intel-to-ARM migration is worth examining because it is a rare architectural pivot carried out in public, at the center of a mature market. Laptops had converged on predictable trade-offs: thin-and-light devices sacrificed sustained performance, while powerful machines accepted noise and heat. Apple’s M‑series chips shifted that frontier by emphasizing performance per watt and tight hardware–software co-design, making high performance viable within thinner, quieter enclosures. The move also reset battery life expectations and reintroduced the idea that specialized on‑chip accelerators should be first-class citizens in general-purpose systems.

Apple announced the transition at WWDC 2020, provided developers with the Developer Transition Kit (an ARM-based machine in a Mac mini enclosure), and shipped Rosetta 2 to translate existing x86‑64 apps, while the Universal 2 binary format let developers ship native ARM64 and x86‑64 code in a single app. The first consumer machines with the M1 (MacBook Air, 13‑inch MacBook Pro, and Mac mini) arrived that November. Beyond performance, the strategic motivation was control: Apple could align product roadmaps with TSMC process nodes, tailor microarchitectural choices to macOS, and avoid dependency on Intel’s cadence. The company committed to converting the Mac lineup within two years and largely hit that target with subsequent desktops and laptops.

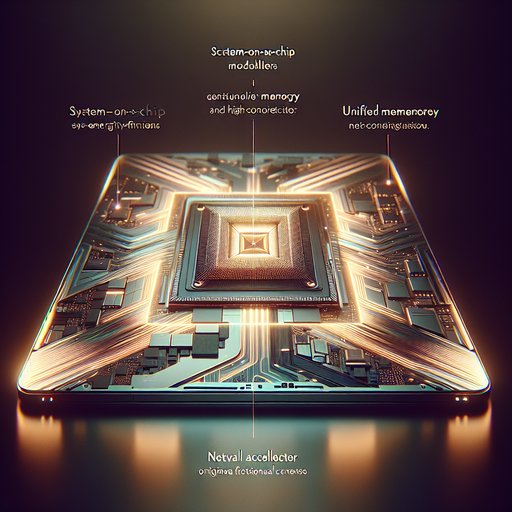

Technically, M1 put several bets on the table at once: big.LITTLE‑style heterogeneous CPU cores, an integrated GPU tuned for Metal, a Neural Engine for on‑device ML, and a unified memory architecture (UMA) with high‑bandwidth LPDDR on package. UMA shrinks data movement—CPU, GPU, and accelerators address the same memory pool—reducing copies and latency while saving power. That design helps small form factors: the fanless M1 MacBook Air could sustain workloads that previously demanded active cooling, while offering markedly longer standby and video playback. Media engines for H.264/HEVC (and later ProRes) offload codecs from the CPU and GPU, translating into smooth editing and efficient exports without external GPUs.
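The data-movement argument can be made concrete with a toy model. The sketch below counts how many bytes cross a memory-pool boundary when one buffer passes through a chain of processing stages. The pipeline, the device-to-pool mappings, and the frame size are illustrative assumptions, not measurements of any real system, and the model ignores caches, coherence traffic, and driver overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    device: str  # processor that runs the stage, e.g. "cpu", "gpu", "npu"


def bytes_copied(stages, buffer_bytes, memory_pool_of):
    """Bytes moved between memory pools as one buffer flows through the stages in order."""
    copied = 0
    for prev, nxt in zip(stages, stages[1:]):
        # A copy is needed only when consecutive stages use different memory pools.
        if memory_pool_of[prev.device] != memory_pool_of[nxt.device]:
            copied += buffer_bytes
    return copied


# Hypothetical video pipeline that touches three kinds of processor.
pipeline = [
    Stage("decode", "cpu"),
    Stage("filter", "gpu"),
    Stage("detect", "npu"),
    Stage("encode", "cpu"),
]

frame_bytes = 3840 * 2160 * 4  # one 4K frame at 4 bytes per pixel

# Assumed layouts: a discrete GPU with its own VRAM versus a single shared pool.
discrete = {"cpu": "system", "gpu": "vram", "npu": "system"}
unified = {"cpu": "shared", "gpu": "shared", "npu": "shared"}

print("discrete:", bytes_copied(pipeline, frame_bytes, discrete), "bytes copied per frame")
print("unified: ", bytes_copied(pipeline, frame_bytes, unified), "bytes copied per frame")
```

In this model the discrete layout pays for two boundary crossings per frame while the unified layout pays for none. Real savings depend on how a given workload is structured.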

Scaling up with M1 Pro, M1 Max, and M1 Ultra showed that Apple’s approach was not confined to ultraportables. Wider memory interfaces delivered hundreds of gigabytes per second of bandwidth, feeding larger GPUs and more CPU cores without departing from UMA principles. M1 Pro and M1 Max introduced dedicated ProRes encode/decode blocks, bringing professional video workloads into laptop-friendly thermal envelopes. M1 Ultra used a high-bandwidth die‑to‑die interconnect to present two Max dies as a single SoC to software, preserving the unified memory model at workstation scale.
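The link between interface width and bandwidth is simple arithmetic: theoretical peak bandwidth is the number of bytes per transfer multiplied by the transfer rate. The sketch below applies that formula. The bus widths and transfer rates are commonly reported figures and are assumptions here, not values taken from this article, and sustained bandwidth in practice is lower than the theoretical peak.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, mega_transfers_per_s: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_s * 1e6 / 1e9


# Assumed configurations: (bus width in bits, transfer rate in MT/s).
configs = {
    "M1": (128, 4266),
    "M1 Pro": (256, 6400),
    "M1 Max": (512, 6400),
    "M1 Ultra": (1024, 6400),
}

for chip, (width_bits, rate) in configs.items():
    print(f"{chip}: {peak_bandwidth_gb_s(width_bits, rate):.1f} GB/s")
```

Under these assumptions, each doubling of the interface width doubles the peak figure, which is how the larger chips reach hundreds of gigabytes per second without changing the memory model.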

Successive generations extended this template rather than abandoning it. M2 improved efficiency and peak throughput while raising unified memory ceilings; M2 Ultra pushed UMA capacity into the high‑end desktop range for memory‑hungry content creation and simulation. With M3, Apple moved to a 3‑nanometer process and added GPU features such as hardware ray tracing, mesh shading, and a memory allocation scheme Apple calls Dynamic Caching to improve utilization. These updates were accompanied by further media engine refinements, maintaining a pattern: generational gains arrive not only from higher clocks or more cores, but from better use of silicon around the CPU through accelerators and bandwidth.

Software continuity was the other half of the story. Rosetta 2’s binary translation, done mostly ahead of time with just-in-time translation for code generated at run time, enabled most existing Mac apps to run with little friction, buying time for native ARM64 ports. Major suites (browsers, productivity tools, creative applications, developer toolchains) delivered native versions, and open‑source ecosystems adapted with multi‑architecture packages and containers. Some workflows changed: Boot Camp for Windows disappeared, and virtualization targets shifted toward ARM guests. Even so, the bulk of day‑to‑day computing on a Mac remained familiar, now with faster app launches, instant wake, and longer unplugged sessions.
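Because translated apps see an x86‑64 environment, tooling sometimes needs to tell a native process from a translated one. The sketch below is one way to check, assuming the `sysctl.proc_translated` key that Apple documents for this purpose. It shells out to the `sysctl` command, so the answer describes that child process, which is assumed to inherit the parent's architecture preference. The function names are hypothetical, and on non-Mac systems the check reports that it cannot tell.

```python
import platform
import subprocess


def is_translated_by_rosetta():
    """Return True or False on macOS, or None when the state cannot be determined."""
    if platform.system() != "Darwin":
        return None  # Rosetta 2 exists only on macOS
    try:
        result = subprocess.run(
            ["sysctl", "-n", "sysctl.proc_translated"],
            capture_output=True,
            text=True,
            check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        return None  # key missing or sysctl unavailable
    return result.stdout.strip() == "1"


def describe_runtime() -> str:
    """Summarize the architecture this interpreter reports and its translation state."""
    machine = platform.machine() or "unknown"
    translated = is_translated_by_rosetta()
    if translated is None:
        return f"{machine} (translation state unknown)"
    return f"{machine} ({'translated by Rosetta 2' if translated else 'native'})"


print(describe_runtime())
```

A check like this is useful in install scripts that must choose between ARM64 and x86‑64 packages, since `platform.machine()` alone reports `x86_64` inside a translated process.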

The industry reaction underscores how Apple’s choices challenged traditional PC architecture. Intel’s Alder Lake and later processors adopted hybrid P‑cores and E‑cores and leaned on Windows 11 scheduler updates to improve responsiveness and efficiency. Qualcomm’s Snapdragon X series for Windows on ARM brought high‑efficiency SoCs and NPUs to thin‑and‑light PCs, and Microsoft’s Copilot+ PC initiative set explicit NPU performance targets, echoing Apple’s early investment in on‑device ML. AMD and Intel added dedicated media and AI blocks, and premium laptops increasingly adopted soldered or on‑package LPDDR, reflecting a shift from socketed, modular designs toward tightly integrated subsystems to win on thermals and battery life.

Apple Silicon also reopens debates about what “PC architecture” should optimize for. Unified memory and in‑package DRAM reduce upgradeability but deliver predictable low‑latency bandwidth and fewer software-visible boundaries, simplifying certain classes of algorithms. Specialized engines shift work from general-purpose cores, cutting power and heat for video, vision, and ML tasks users run daily. For developers, the cost is learning to target these blocks—Metal for graphics, Core ML for inference, and frameworks that minimize data copies—yet the reward is performance that scales with efficiency rather than fan speed.

Taken together, Apple’s transition demonstrates that laptop design no longer has to trade sustained performance against silence and battery life as starkly as it once did. By pairing an ARM ISA with aggressive SoC integration and OS‑level scheduling tuned for heterogeneity, Apple raised the baseline for what a premium portable can deliver. Competitors have responded with their own hybrid cores, NPUs, and media engines, ensuring that the benefits propagate across platforms. The PC is not becoming a phone; rather, it is adopting the parts of phone-era engineering that demonstrably improve everyday computing.